Artificial intelligence has made remarkable progress in the past decade. That progress has come with significant increases in AI training costs and in carbon emissions, which continue to harm the environment.

To understand how training and using AI systems produces carbon emissions, it helps to note that, for a given electricity source, the amount of carbon emitted is directly proportional to the electricity consumed, whether by the specialized hardware used for AI training or by data centre cooling. The impact of AI systems on the environment can be understood by dividing the AI development process into three stages:

- Stage 1 – Data collection: Training AI systems requires massive amounts of data, which are stored in data centres around the world. These data centres consume large amounts of energy and produce carbon emissions.

- Stage 2 – AI training: Training AI systems requires specialized hardware, such as GPU workstations, along with cooling systems. Both consume energy and produce varying levels of carbon emissions.

- Stage 3 – AI deployment: Trained models serve large-scale inference workloads across large user populations, for example on IoT devices or for the millions of people who use ChatGPT, and these workloads produce significant carbon emissions.

As AI systems become more widely used, the pursuit of ever more accurate models dominates the field, with little consideration for cost, efficiency, and environmental impact. Research has shown that the computation required for deep learning research has been doubling every few months, resulting in an estimated 300,000x increase from 2012 to 2018, and that these computations have a surprisingly large carbon footprint (Schwartz et al. 2019).
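As a rough check on how "doubling every few months" relates to a 300,000x increase, the short Python sketch below computes the doubling time implied by steady exponential growth. It assumes that "2012 to 2018" means a six-year (72-month) window; the function name is illustrative and does not come from the cited research.

```python
import math


def doubling_time_months(growth_factor: float, span_months: float) -> float:
    """Doubling time implied by steady exponential growth over span_months."""
    doublings = math.log2(growth_factor)
    return span_months / doublings


# Assumption: "2012 to 2018" is treated as a six-year (72-month) window.
doublings = math.log2(300_000)
months = doubling_time_months(300_000, 72)
print(f"{doublings:.1f} doublings, one every {months:.1f} months")
```

Under that assumption, a 300,000x increase corresponds to roughly 18 doublings, or about one doubling every four months. A shorter window would give a shorter doubling time.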

With support from UBC’s Green Labs Fund, Dr. Xiaoxiao Li, Assistant Professor at the Department of Electrical and Computer Engineering, together with Jane Shi, a Master's student in Electrical and Computer Engineering, has set up a project to investigate how different machine learning (ML) setups affect carbon emissions. The project uses tools that measure the energy consumed by running an ML model and combine it with the carbon intensity of a given area to calculate the carbon footprint.
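The calculation these tools perform can be sketched in a few lines of Python: measured energy multiplied by the carbon intensity of the local electricity supply. This is a minimal illustration rather than the project's actual tooling. The function name and every number below are placeholders chosen for the example, not measurements from the project.

```python
def carbon_footprint_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in kg CO2e: energy (kWh) x carbon intensity (g CO2e/kWh) / 1000."""
    if energy_kwh < 0 or intensity_g_per_kwh < 0:
        raise ValueError("energy and carbon intensity must be non-negative")
    return energy_kwh * intensity_g_per_kwh / 1000.0


# Placeholder values for illustration only (not project data).
training_energy_kwh = 12.0
example_grids = {"lower-carbon grid": 20.0, "higher-carbon grid": 600.0}

for name, intensity in example_grids.items():
    kg = carbon_footprint_kg(training_energy_kwh, intensity)
    print(f"{name}: {kg:.2f} kg CO2e")
```

The same training run has a very different footprint depending on where it runs, which is why the carbon intensity of the area enters the calculation alongside the measured energy.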

Their experiments have shown that ML model runtime is positively correlated with carbon emissions, and that larger batch sizes reduce emissions but involve a trade-off with model accuracy. They also ran experiments with quantization, a technique that can reduce model size by 4x and speed up inference. This can reduce AI computation demands and increase power efficiency.
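One common way to reach a 4x size reduction is to store each weight as an 8-bit integer instead of a 32-bit float. The sketch below shows that idea with simple symmetric linear quantization in plain Python. It is an assumption about the kind of quantization involved, not a description of the team's experiments, and the weights are made up.

```python
from array import array


def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = array("b", (round(w / scale) for w in weights))
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [v * scale for v in quantized]


# Hypothetical weights stored as 32-bit floats.
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.77, 0.05])
q, scale = quantize_int8(weights)

fp32_bytes = len(weights) * weights.itemsize  # 4 bytes per weight
int8_bytes = len(q) * q.itemsize              # 1 byte per weight
ratio = fp32_bytes // int8_bytes
max_error = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))

print(f"{fp32_bytes} bytes -> {int8_bytes} bytes ({ratio}x smaller)")
print(f"largest rounding error: {max_error:.4f}")
```

The storage saving comes directly from the narrower data type, while the rounding error (at most half of `scale` per weight) is the source of the accuracy cost that quantization has to manage.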

To extend the impact from lab to university, Dr. Li and Jane have also collaborated with UBC ARC (Advanced Research Computing) to integrate a power consumption monitoring tool, as a Slurm IPMI module, that tracks power usage for all the compute nodes across the Sockeye High-Performance Cluster (HPC). This has enabled them to collect data on the energy consumption and carbon emissions of computation jobs submitted to Sockeye, analyze that data, and propose best practices for reducing the carbon footprint of training ML models.
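Power monitoring yields a series of readings in watts, and turning those into energy means integrating power over time. The Python sketch below does this with the trapezoidal rule. The sample format and the readings are hypothetical and do not reflect the actual output of the Slurm module or the team's analysis code.

```python
def energy_kwh(samples):
    """Trapezoidal integration of (timestamp_seconds, watts) samples into kWh."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        if t1 < t0:
            raise ValueError("samples must be sorted by time")
        joules += 0.5 * (p0 + p1) * (t1 - t0)
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


# Hypothetical readings for one node: (seconds since job start, watts).
node_samples = [(0, 300.0), (1800, 500.0), (3600, 500.0)]
job_energy = energy_kwh(node_samples)
print(f"job energy: {job_energy:.2f} kWh")
```

Summing this quantity over all nodes and jobs gives a cluster-level energy figure, which can then be converted to emissions using the local carbon intensity.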

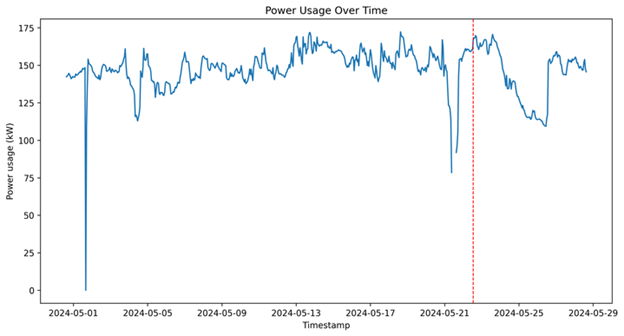

The visualization of the power usage trend in May 2024 shows two drops in the middle (May 1st and 21st), which correspond to periods of maintenance. From the data, the team calculated that Sockeye consumed approximately 100 MWh of energy over the month. Interestingly, they also observed a rapid drop in power usage after May 22nd. They hypothesize that this was due to a paper deadline for a major ML conference on that day (marked by the red dashed line): ML researchers ran many experiments on Sockeye in the few weeks leading up to the deadline and, hopefully, took a break shortly afterwards.

By analyzing the hourly power usage pattern for each day, the team found that relative power usage is highest from 2 pm to 11 pm, second highest from 12 am to 7 am, and lowest from 8 am to 11 am.
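An hourly analysis of this kind can be sketched as follows: average the readings for each hour of the day, then compare the means across named time periods. This is a hedged illustration only. The readings are synthetic values chosen to mirror the reported ordering, and the period boundaries (treated as start hour inclusive, end hour exclusive) are one assumed reading of the ranges quoted above.

```python
from collections import defaultdict
from datetime import datetime


def mean_power_by_hour(readings):
    """Average power for each hour of day from (datetime, watts) readings."""
    totals = defaultdict(lambda: [0.0, 0])
    for ts, watts in readings:
        totals[ts.hour][0] += watts
        totals[ts.hour][1] += 1
    return {hour: s / n for hour, (s, n) in sorted(totals.items())}


def rank_periods(hourly, periods):
    """Rank named hour ranges (start inclusive, end exclusive) by mean power."""
    means = {}
    for name, (start, end) in periods.items():
        values = [hourly[h] for h in range(start, end) if h in hourly]
        if values:
            means[name] = sum(values) / len(values)
    return sorted(means, key=means.get, reverse=True)


# Synthetic readings for illustration only (not Sockeye data).
readings = [
    (datetime(2024, 5, 6, 3), 120.0),
    (datetime(2024, 5, 6, 9), 90.0),
    (datetime(2024, 5, 6, 15), 150.0),
    (datetime(2024, 5, 7, 3), 130.0),
    (datetime(2024, 5, 7, 9), 100.0),
    (datetime(2024, 5, 7, 15), 160.0),
]
periods = {"2 pm-11 pm": (14, 24), "12 am-7 am": (0, 8), "8 am-11 am": (8, 12)}

hourly = mean_power_by_hour(readings)
ranking = rank_periods(hourly, periods)
print(ranking)
```

Averaging by hour of day before comparing periods keeps a single unusually busy day from dominating the pattern.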

We would like to thank Dr. Xiaoxiao Li and Jane Shi for their incredible work. You can learn more about this project, along with a handful of the many other projects supported by the Green Labs Fund since its inception in 2009, by watching the recorded event The Laboratories of Tomorrow: A Sustainability Showcase. More information about the Green Labs Fund can be found on our webpage: Green Labs Fund.